How does the operating system load my process and libraries into memory?

It maps the executable and library file contents into the address space of the process. If many programs only need read access to the same file (e.g. /bin/bash, the C library), then the same physical memory can be shared between multiple processes.

- Device driver memory mapping: memory mapping is one of the most interesting features of a Unix system. From a driver's point of view, the memory-mapping facility lets user space access device memory directly. To support an mmap operation on a device, the driver must implement the mmap field of its struct file_operations.

- Linux does read-ahead for memory-mapped files, but I'm not sure about Windows. It is also possible to bypass the page cache entirely using O_DIRECT on Linux or FILE_FLAG_NO_BUFFERING on Windows, something database software often does. A file mapping may be private or shared.


The same mechanism can be used by programs to directly map files into memory.

How do I map a file into memory?

A memory-mapped file contains the contents of a file in virtual memory. This mapping between a file and memory space enables an application, including multiple processes, to modify the file by reading and writing directly to the memory. A memory-mapped file is a segment of virtual memory that has been assigned a direct byte-for-byte correlation with some portion of a file or file-like resource. This resource is typically a file that is physically present on disk, but can also be a device, shared memory object, or other resource that the operating system can reference through a file descriptor.

A simple program to map a file into memory is shown below. The key points to notice are:

- `mmap` requires a file descriptor, so we need to `open` the file first.
- `mmap` only works on seekable (`lseek`-able) file descriptors, i.e. 'true' files, not pipes or sockets.
- We seek to our desired size and write one byte to ensure that the file is of sufficient length (failing to do so causes your program to receive SIGBUS upon trying to access the file). `ftruncate` would also work.
- When finished, we call `munmap` to unmap the file from memory.

This example also shows the preprocessor constants `__LINE__` and `__FILE__`, which hold the current line number and the name of the file currently being compiled.

The contents of our binary file can be listed using hexdump

The careful reader may notice that our integers were written in least-significant-byte format (because that is the endianness of the CPU) and that we allocated a file that is one byte too many!

The PROT_READ | PROT_WRITE options specify the virtual memory protection. The option PROT_EXEC (not used here) can be set to allow CPU execution of instructions in memory (e.g. this would be useful if you mapped an executable or library).

Difference between read + write and mmap

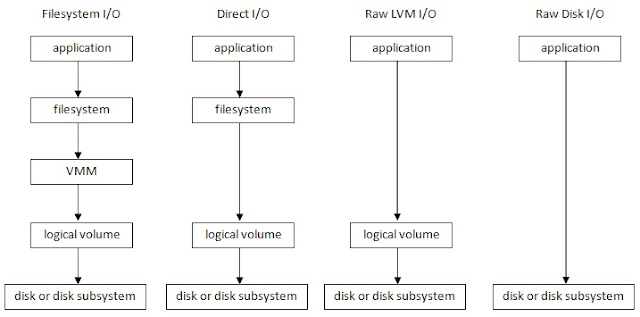

On Linux, there are more similarities between these approaches than differences. In both cases, the file is actually loaded into the disk cache before reads or writes occur, and writes are first performed directly on pages in the disk cache rather than on the hard disk. The operating system will synchronize the disk cache with disk eventually; you can use sync (or its variants) to request that it do so immediately.

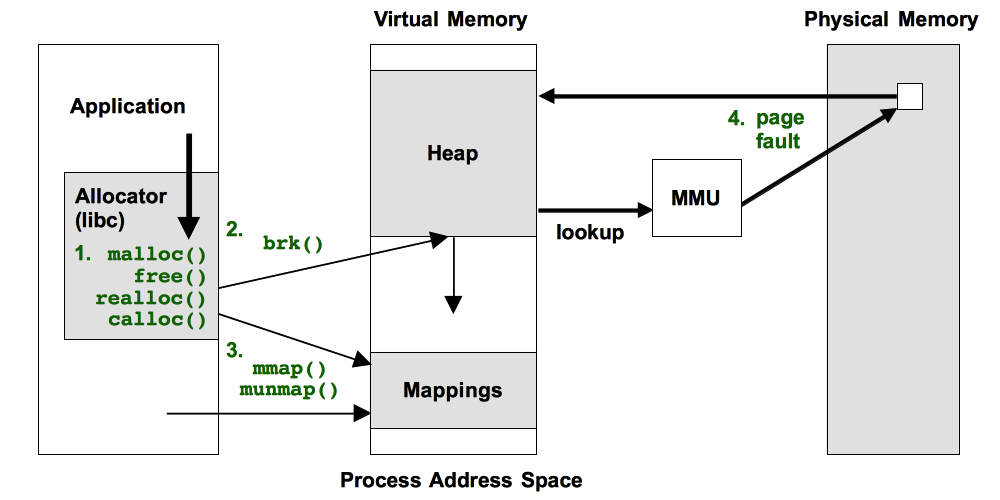

However, mmap allows your process to directly access the physical memory stored in the page cache. It does this by pointing the process's page table entries at the same page frames found in the disk cache. read, on the other hand, must first load data from disk into the page cache and then copy it from the page cache into a user-space buffer (write does the same, but in the opposite direction).

On the downside, creating this mapping between the process address space and the page cache requires the kernel to set up many new page table entries, and leads to additional (minor) page faults upon first-time access of each file page.


What are the advantages of memory mapping a file?

For many applications the main advantages are:

- Simplified coding - the file data is immediately available as if it were all in main memory. No need to parse the incoming data in chunks and store it in new memory structures like buffers.

- Sharing of files - memory mapped files are particularly convenient when the same data is shared between multiple processes.

- Lower memory pressure - when resources run tight, the kernel can easily drop data from the disk cache out of main memory and into backing disk storage (clean pages don't even need to be written back to disk). On the other hand, loading large files into buffers will eventually force the kernel to store those pages (even clean ones) in swap space, which may run out.

Note that for simple sequential processing, memory-mapped files are not necessarily faster than standard 'stream-based' approaches such as read, fscanf, etc., due primarily to minor page faults and to the fact that loading data from disk into the page cache is a much tighter performance bottleneck than copying from one buffer to another.


How do I share memory between a parent and child process?

Easy - use mmap without a file: just specify the MAP_ANONYMOUS and MAP_SHARED options, and create the mapping before calling fork so that the child inherits it!

Can I use shared memory for IPC?

Yes! As a simple example you could reserve just a few bytes and change the value in shared memory when you want the child process to quit. Sharing anonymous memory is a very efficient form of inter-process communication because there is no copying, system call, or disk-access overhead - the two processes literally share the same physical frame of main memory.

On the other hand, shared memory, like multithreading, creates room for data races. Processes that share writable memory might need to use synchronization primitives like mutexes to prevent these from happening.